Framework

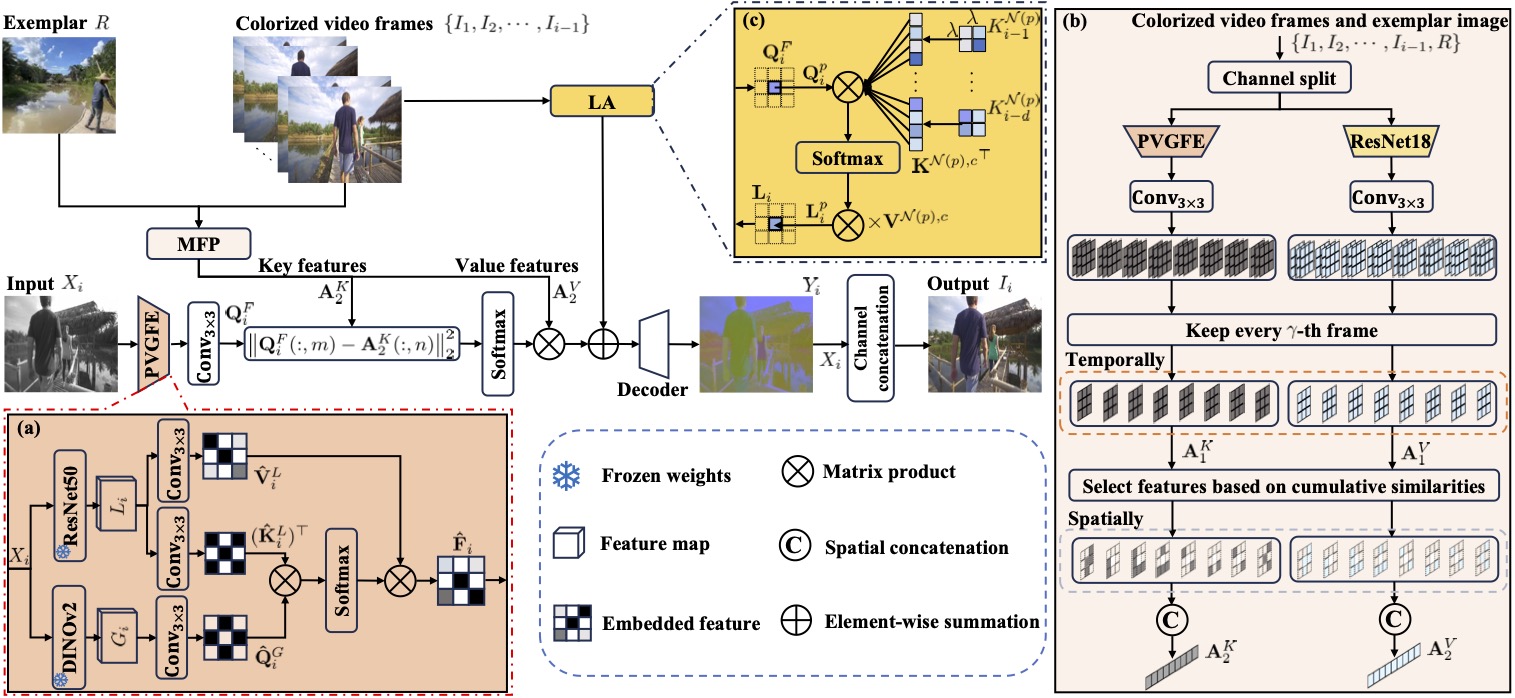

Our goal is to develop an effective and efficient video colorization method that restores high-quality videos with low GPU memory requirements. The proposed ColorMNet contains three modules: a large-pretrained visual model guided feature estimation (PVGFE) module that extracts spatial features from each frame, a memory-based feature propagation (MFP) module that adaptively explores temporal features from far-apart frames, and a local attention (LA) module that exploits similar contents in adjacent frames for better spatial and temporal feature utilization.

An overview of the proposed ColorMNet. The core components of our method are (a) the large-pretrained visual model guided feature estimation (PVGFE) module, (b) the memory-based feature propagation (MFP) module, and (c) the local attention (LA) module.
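To make the data flow between the three modules concrete, the following is a minimal sketch in plain Python and not the actual ColorMNet implementation. Every name in it (`attend`, `colorize_video`, `extract_features`, `decode`, `memory_size`, `local_window`) is hypothetical. It assumes that frame features are flat vectors, that a single dot-product attention can stand in for both the MFP and LA modules, that a first-in-first-out buffer can stand in for the memory, and that the three feature sources are fused by simple averaging. None of these choices is stated in the text above.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def attend(query: Vector, keys: Sequence[Vector], values: Sequence[Vector]) -> Vector:
    """Softmax-weighted average of `values`, weighted by dot-product similarity to `query`."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [
        sum(w * v[i] for w, v in zip(weights, values)) / total
        for i in range(len(values[0]))
    ]


def colorize_video(
    frames: Sequence[object],
    extract_features: Callable[[object], Vector],  # hypothetical stand-in for PVGFE
    decode: Callable[[Vector], object],            # hypothetical output head
    memory_size: int = 8,                          # assumed cap on stored frames
    local_window: int = 1,                         # assumed number of adjacent frames
) -> List[object]:
    memory: List[Vector] = []  # features of earlier frames, oldest first
    outputs: List[object] = []
    for frame in frames:
        feat = extract_features(frame)  # per-frame spatial features
        if memory:
            # Stand-in for MFP: attend over every stored (possibly far-apart) frame.
            far = attend(feat, memory, memory)
            # Stand-in for LA: attend only over the most recent, adjacent frames.
            near_feats = memory[-max(1, local_window):]
            near = attend(feat, near_feats, near_feats)
            # Assumed fusion rule: plain average of the three feature sources.
            fused = [(f + a + b) / 3.0 for f, a, b in zip(feat, far, near)]
        else:
            fused = feat  # first frame: no history to draw on
        outputs.append(decode(fused))
        memory.append(feat)
        if len(memory) > memory_size:
            memory.pop(0)  # drop the oldest entry so memory use stays bounded
    return outputs
```

Calling `colorize_video(frames, extract_features, decode)` with any two callables of the stated shapes returns one decoded result per input frame. The bounded buffer is only meant to illustrate how a memory-based design can keep its footprint independent of video length.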